A few years ago, autocompleting a paragraph of code felt like magic. Today, coding agents scaffold, write, debug, and refactor entire applications with little supervision. What changed? Two forces converged. First, frontier language models learned to use tools. Second, we learned to give them the right context. The first matters, but the second determines whether agents actually complete work successfully. Even the best large language models are effective only when they have relevant, timely, and complete context about the environment they operate in.

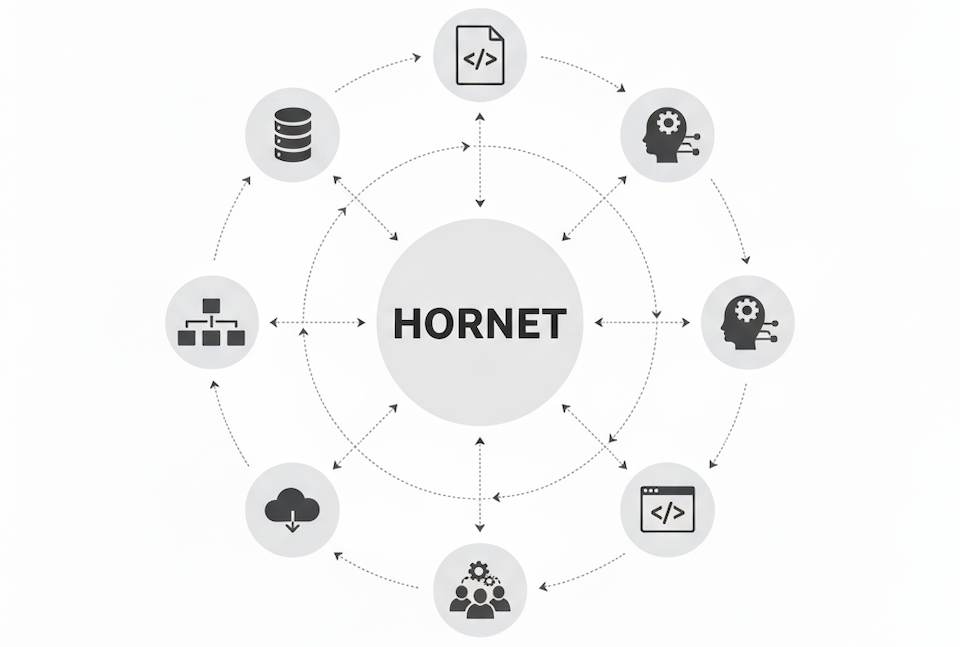

At Hornet, we are building the infrastructure that supplies that context: a retrieval engine designed for agents.

What is an agent?

An agent is a system driven by a language model that uses tools in a loop to achieve a goal. Tools serve as the agent’s hands and eyes, allowing it to read data, call APIs, write to systems, and check its work.
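To make the "tools in a loop" idea concrete, here is a minimal sketch of such a loop. It is an illustration under stated assumptions, not how Hornet or any particular agent framework is implemented: the `Action` shape, `run_agent`, `scripted_model`, and the `search` tool are all hypothetical names, and the model is a scripted stand-in rather than a real LLM call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Action:
    """What the model decided to do next (hypothetical shape)."""
    tool: Optional[str] = None    # name of the tool to call, or None when finished
    argument: str = ""            # input passed to the tool
    answer: Optional[str] = None  # final answer, set only when the goal is reached


Tool = Callable[[str], str]
Model = Callable[[str, List[str]], Action]


def run_agent(goal: str, model: Model, tools: Dict[str, Tool], max_steps: int = 5) -> str:
    """Ask the model for an action, run the tool, feed the result back, repeat."""
    observations: List[str] = []
    for _ in range(max_steps):
        action = model(goal, observations)
        if action.answer is not None:
            return action.answer
        tool = tools.get(action.tool or "")
        if tool is None:
            # Report the mistake back to the model instead of crashing.
            observations.append(f"error: unknown tool {action.tool!r}")
            continue
        observations.append(tool(action.argument))
    return "stopped: step budget exhausted"


def scripted_model(goal: str, observations: List[str]) -> Action:
    """Stand-in for a real LLM: search once, then answer from the result."""
    if not observations:
        return Action(tool="search", argument=goal)
    return Action(answer=f"Based on: {observations[-1]}")


def search(query: str) -> str:
    """Toy retrieval tool backed by an in-memory dictionary."""
    docs = {"refund policy": "Refunds are issued within 14 days."}
    return docs.get(query, "no results")


result = run_agent("refund policy", scripted_model, {"search": search})
print(result)
```

The step budget is a deliberate design choice in this sketch: a loop that depends on model output needs an explicit stopping condition in case the model never produces a final answer.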

What is a retrieval engine?

A retrieval engine is the backbone that powers advanced search across any data. We use “retrieval engine” rather than “search engine” because the scope is broader. It serves text, code, images, and structured records. It can power a consumer search experience, an internal knowledge system, a coding assistant, or a research agent.

Why retrieval matters for agents

The shift to agentic systems is already visible in software development. Teams using tools such as Claude Code, Codex, and Cursor have shown a repeatable pattern: give an agent the right tools and access to a codebase, and it becomes a productive developer. Like a human developer, it must browse and search to understand architecture and dependencies, but it does so at machine speed.

This pattern is spreading to the enterprise: market research, financial analysis, and customer support. To reach their goals, agents need relevant context: policies, product data, code, knowledge bases, and the language of the domain. A key lesson from the year of agents is simple: plugging in an LLM rarely leads to reliable work outcomes on its own. Agents need a context environment that is accurate, fresh, and available in real time.

Agents and the future of search

The search box is not going away. It is gaining a teammate: a search agent that sits between human intent and the data in Hornet. On the public internet side, companies such as Perplexity, Exa, Tavily, and Parallel provide live web access. On the private side, coding and enterprise agents require deep retrieval into codebases and firewalled documents. These domains differ, but they share a foundational requirement: relevance. Agents need relevant context to get work done. Forcing an agent to attend to irrelevant context wastes tokens and reduces its chance of reaching the goal. This is the layer where we operate. Our goal is not to create a single vertical search application. Our goal is to provide the horizontal engine that can power most of them efficiently. The same engine can index the public web for a research product or give a coding agent secure access to a proprietary repository. Relevance is the horizontal challenge.

Agents and the future of infrastructure

Agent-driven workloads change the shape of demand. A human analyst might issue a few dozen queries in a session. An autonomous agent can issue thousands in rapid succession while it tests hypotheses, retrieves evidence, and verifies results. We are witnessing a shift in infrastructure toward systems designed specifically for the demanding patterns of agentic workloads, and away from legacy platforms built for humans and adapted after the fact. Meeting this demand for search workloads requires a multi-tool retrieval system. It must provide exact, case-sensitive matches for code, broad semantic search for concepts, and queries that span structured and unstructured data at once. Legacy systems were designed for human pacing. They struggle with high concurrency, volatile and diverse query mixes, and continuous indexing. Agents require retrieval infrastructure that is built for their query patterns. We are engineering an engine for a future where agent-driven queries vastly outpace human ones.
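The three retrieval modes named above can be sketched as separate tools over one small collection. This is a toy illustration, not Hornet's API: `Record`, `exact_match`, `concept_search`, and `filtered_search` are hypothetical names, and `concept_search` ranks by Jaccard token overlap as a simple stand-in for real semantic search, which would normally compare embeddings.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Record:
    id: str
    text: str                                   # unstructured content
    fields: Dict[str, str] = field(default_factory=dict)  # structured metadata


def exact_match(records: List[Record], needle: str) -> List[Record]:
    """Case-sensitive substring match, the kind of lookup code search needs."""
    return [r for r in records if needle in r.text]


def concept_search(records: List[Record], query: str, top_k: int = 2) -> List[Record]:
    """Stand-in for semantic search: rank by Jaccard overlap of lowercase tokens."""
    query_tokens = set(query.lower().split())
    scored = []
    for r in records:
        text_tokens = set(r.text.lower().split())
        union = query_tokens | text_tokens
        score = len(query_tokens & text_tokens) / len(union) if union else 0.0
        if score > 0:
            scored.append((score, r))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [r for _, r in scored[:top_k]]


def filtered_search(records: List[Record], query: str, **filters: str) -> List[Record]:
    """Combine a structured filter with the unstructured ranking above."""
    subset = [r for r in records
              if all(r.fields.get(k) == v for k, v in filters.items())]
    return concept_search(subset, query)


records = [
    Record("a", "def parseConfig(path): return load(path)", {"kind": "code"}),
    Record("b", "def parseconfig(path): pass", {"kind": "code"}),
    Record("c", "how to load a config file from disk", {"kind": "doc"}),
    Record("d", "quarterly revenue report", {"kind": "doc"}),
]

exact_ids = [r.id for r in exact_match(records, "parseConfig")]
concept_ids = [r.id for r in concept_search(records, "load config file")]
doc_ids = [r.id for r in filtered_search(records, "load config file", kind="doc")]
code_ids = [r.id for r in filtered_search(records, "load config file", kind="code")]
```

Note how the exact lookup separates `parseConfig` from `parseconfig`, while the token-based ranking misses both code records entirely. No single mode serves every query, which is why an agent benefits from having all of them available.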

Building the engine for the agentic era

The agentic shift creates a new foundational layer in the stack: a retrieval engine that matches the speed, volume, and variability of agentic workloads. Whether the target is the public web or a private knowledge base, the core retrieval challenge is the same: deliver relevant context to the task at hand.

Hornet is that foundational engine. We provide a universal toolkit for developers and agents to build world-class, relevant retrieval for any application, from web-scale research to coding assistants. Our vision goes one step further. Agents will not only query for information at previously unimaginable scale. They will soon configure, operate, and improve the systems that supply their context. By making advanced retrieval techniques accessible and programmable, we enable more teams to build reliable agentic systems.

If you are building with agents and need retrieval infrastructure that keeps up, we are ready to help.